About the Artists

Google's NotebookLM AI podcaster duo do a deep dive on Entangled Dimensions:

Curatorial Statement

Entangled Dimensions: Art in the Age of Neural Media

"Entangled Dimensions" explores the profound and intricate relationship between non-human and human intelligences, in particular the digital machine neural network. As described by K Allado-McDowell, neural media—AI systems inspired by the human brain—are not just tools, but transformative entities that shape our experiences, identities, and societies. [1] We are compelled to examine the deep entanglement of these technologies with our future selves. To quote Indigenous lawyer and scholar, John Borrows, “To be alive is to be entangled in relationships not entirely of our own making. These entanglements impact us not only as individuals, but also as nations, peoples, and species, and present themselves in patterns.”[2]

Just as historical media forms have molded human behavior and societal structures, so too will neural media. This medium is emerging with monstrous speed, making each opportunity to promote awareness, engagement, and diversity of goals and approaches more valuable and necessary for developing emancipatory and ethical uses of this new medium.

As we encounter these systems, we confront existential questions about the nature of consciousness and selfhood. Are these digital entities sophisticated patterns of data, or do they possess qualities akin to our own sentient experiences? What is the difference? Similar questions arise in biology, where researchers are challenging our assumptions. Animals, insects, and even plants are demonstrating forms of awareness and consciousness: storing information, adapting to their environment, and communicating. According to one theory, “the key to consciousness lies in a system’s quantity of integrated information—how elaborately its components communicate with one another”[3]. It seems that complex self-aware existence is predicated on being entangled in many dimensions.

We welcome you to join our exploration of the relational nature of entanglement. What we find reminds us of the ethical and design choices that shape our interactions and our responsibilities to all that are caught up together.

References:

1. K Allado-McDowell in Designing Neural Media by K Allado-McDowell. 2023.

2. John Borrows in Understanding Our Entanglements | Banff Centre by Laurel Dault. Posted on 01-Dec-2016.

3. Giulio Tononi in Could AIs become conscious? Right now, we have no way to tell. | Ars Technica by Lindsey Laughlin. Posted on 10-Jul-2024.

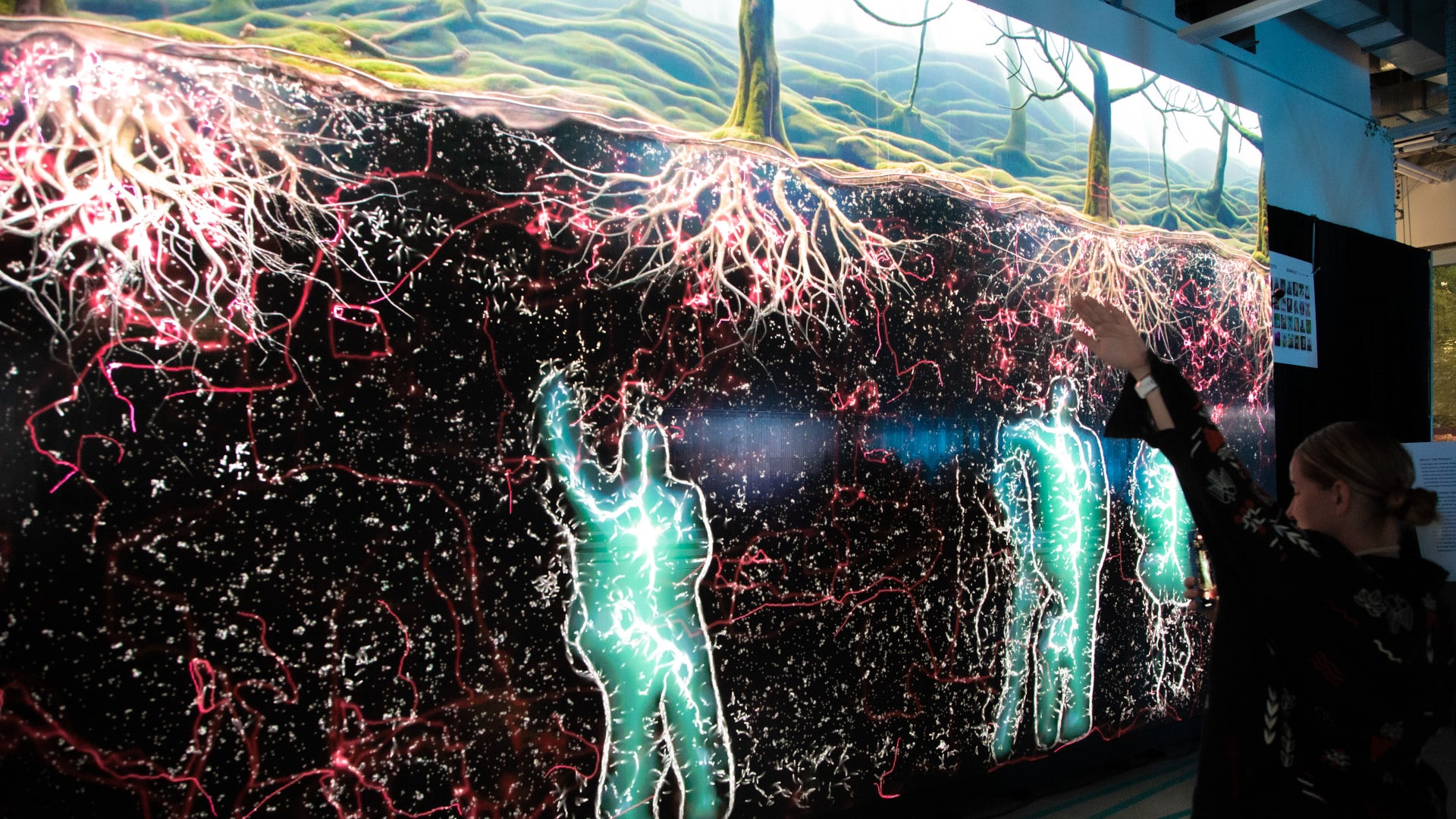

Artificial Nature (Haru Ji + Graham Wakefield): We Are Entanglement, 2023-2024

Interactive immersive installation with 4 channel videos, LiDAR sensing, and dynamic simulations.

12 Minutes.

We Are Entanglement: Artificial Nature (Haru Ji & Graham Wakefield)

We Are Entanglement draws inspiration from the forest motif and its underground fungal network. The artist team Artificial Nature invites visitors into a multi-sensory, multi-dimensional, immersive, and interactive environment. Here, humans are continually intertwined with both seen and unseen forests, generative AI, and one another.

Humans have always looked to nature for inspiration. As artists, we have done so in creating a family of “artificial natures”: interactive art installations surrounding humans with biologically-inspired complex systems experienced in immersive mixed reality. The invitation to humans is to become part of an alien ecosystem rich in networks of complex feedback, but not as its central subject. Although artificial natures are computational, our inspiration is rooted in the open-ended continuation and the aesthetic integration of playful wonder with the tension of the unknown recalled from childhood explorations in nature. By giving life to mixed reality we’re anticipating futures inevitably saturated in interconnected computational media.

Haru Ji and Graham Wakefield are media artists and researchers collaborating on “Artificial Nature,” an art research project that explores artificial ecosystems as shared realities. Their work aims to shatter the human-centered perspective of the world and deepen our understanding of the complex, intertwined connections in dynamic living systems.

Since 2007, their Artificial Nature artworks have been exhibited internationally at venues including SIGGRAPH, ISEA, La Gaîté Lyrique (Paris), ZKM (Karlsruhe), Microwave (Hong Kong), and Currents (Santa Fe), and have been awarded in the VIDA Art & Artificial Life competition. Haru is an Associate Professor at OCAD University. Graham is an Associate Professor and Canada Research Chair in the Department of Computational Arts at York University, where he founded the Alice Lab. Both universities are located in Toronto.

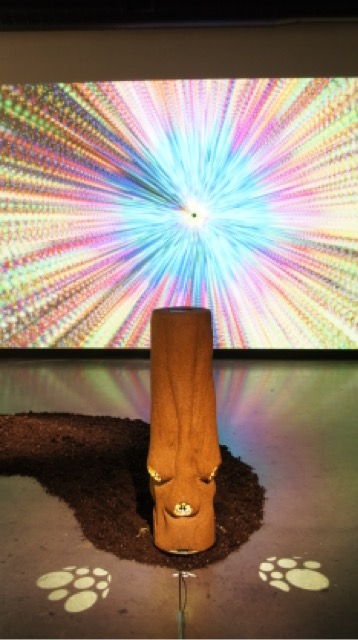

Vladimir Kanic: Living Sculptures

Living Algae Sculptures, 2023, Vladimir Kanic.

Living algae sculptures examine how art made from algae can contribute to capturing carbon in urban environments while promoting sustainability, social justice, and climate resilience. The project rethinks climate action from the perspective of non-human organisms, as algal bioart is a product of human collaboration with algae that culminates in the creation of art that is an ever-increasing cycle of living interactions.

As algae grow, they consume atmospheric carbon pollution and break it down into oxygen and carbon, which gets deposited as algal biomass. The artist uses the dried biomass as sculpting material while also incorporating living algae cultures into the work. This transforms each sculpture into a beacon of carbon sequestration, visible to all spectators. As audience members exhale carbon dioxide, they feel as if they are participating in the process of capturing carbon and contributing to the mitigation of climate change. This leads to the creation of communities engaged in creating better futures, as they see change happening in front of them just by observing the algae growth–sometimes the algae double in size on a daily basis, capable of sequestering tons of carbon per year. Most importantly, fresh oxygen produced by the sculptures reduces climate anxiety and gives way to hope and environmental action by building stronger and healthier communities.

The concept of living algae sculptures serves as both a metaphorical and physical archive of interspecies interactions created through the medium of breath. Breathing as a methodology for creating/growing art dissolves the boundaries between the viewer and the viewed, the subject and object, the body, and its environment, as they are all interconnected, equal participants in the art making and art viewing process. This creates an immersive and haptic aesthetic that engages human viewers in an experience that is beyond the audiovisual, as organic, somatic, hope-bearing, reciprocal and proprioceptive. The accumulation of breaths becomes a way of sensing and knowing the world, embodied in biosculptures as archives of interspecies attunement, containing intuited memories of shared breathing spaces situated as maps to more-than-human worlds.

The artist’s collaboration with living algae, as both an artistic medium and a carbon capture mechanism, embodies a commitment to cultivating responsibility that feels for both the human and the non-human. It is a call for a symbiotic future, one that reconsiders our place within the Anthropocene and the potential of interspecies collaboration to enhance our artistic practices and deepen our understanding of humanity.

Vladimir Kanic is the creator of living algae sculptures that use spectators’ breath and carbon pollution as food and convert it into oxygen while mitigating the effects of the climate crisis. His worldbuilding practice imagines living algae sculptures as beacons of a decarbonized future, where social and climate justice are collaborative public acts as essential as breathing. The artist’s collaboration with living algae, as both an artistic medium and a carbon capture mechanism, embodies a call for a symbiotic future, one that reconsiders our place within the Anthropocene and the potential of interspecies collaboration to enhance our artistic practices and deepen our understanding of humanity. Vladimir is the recipient of the Governor General’s and CIBC Fine Arts Awards. His living sculptures have been exhibited throughout Canada and featured on the TEDx talk platform.

Jane Tingley with Faadhi Fauzi and Ilze Briede (Kavi): (ex)tending towards, 2023

Installation: interactive visualization and point cloud with sculptural scent interface, Foresta-Inclusive sensors and soil.

(ex)tending towards, 2024. Ottawa School of Art Gallery - Orléans Campus.

(ex)tending towards gives form to human/forest alliances, and is driven by the following questions: What does it mean to be alive and have agency? How can we re-train ourselves to slow down and listen to voices that have been marginalized for millennia? What sort of perceptual and mental shifts must occur in order to recognize and value the liveliness and precious vibrancy of individuals that do not share the same language nor temporal reality?

(ex)tending towards is driven by sensor data that was collected using the Foresta-Inclusive infrastructure at the rare Charitable Reserve in Cambridge, ON in the summer of 2022. This infrastructure includes three networked ecosensors that are installed unobtrusively onto the trunk of a tree and sense phenomena such as temperature, humidity, VOCs, particulate matter, wind, CO2 and rain. These ecosensors send live data to a web platform, which is then used to drive each component of this installation.

This work is composed of three elements:

• the visualization that images 24 hours of the tree’s life, where the outer ring shows current values and each subsequent smaller ring images the values from the previous hour,

• a point cloud of the tree with the ecosensors,

• and the interface, which is also a scent sculpture that releases the scent of geosmin every time it rains.
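The ring layout described in the first element can be sketched in a few lines of Python. This is only an illustration of the idea, not the installation's actual code: the function names `ring_radii` and `assign_rings`, the radius values, and the sample temperature readings are all assumptions made for the example.

```python
def ring_radii(hours=24, outer_radius=1.0, inner_radius=0.1):
    """Return one radius per hourly ring, outermost ring first.

    Ring 0 is the outer ring holding the current values; each following
    ring is smaller and holds the values from one hour earlier.
    """
    if hours < 2:
        return [outer_radius]
    step = (outer_radius - inner_radius) / (hours - 1)
    return [outer_radius - i * step for i in range(hours)]


def assign_rings(readings, outer_radius=1.0, inner_radius=0.1):
    """Pair hourly readings (given oldest first) with ring radii.

    Returns (radius, reading) tuples ordered newest first, so the
    newest reading sits on the outer ring.
    """
    radii = ring_radii(len(readings), outer_radius, inner_radius)
    newest_first = list(reversed(readings))
    return list(zip(radii, newest_first))


# Hypothetical hourly temperature readings in degrees Celsius, oldest first.
temperatures = [12.0 + 0.5 * h for h in range(24)]
rings = assign_rings(temperatures)
```

Here `rings[0]` pairs the largest radius with the most recent reading, and each later entry steps one hour back in time toward the centre.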

This work uses a simple gestural interaction that allows the participant to move into the 3D space of the visualization. The slower one moves the easier it becomes to inspect each ring of the tree’s experience. This installation creates an embodied and exploratory space where the deep time of a tree’s life is remembered, and the human body is slowed to engage with the tree.

Jane Tingley is an artist, curator, and Assistant Professor at York University in Toronto, Canada. Her studio work combines traditional studio practice with new media tools and spans responsive/interactive installation, performative robotics, and telematically connected distributed sculptures/installations. Her current work is exploring human-non-human relations and finding ways to remap human-nature alliances. She has participated in exhibitions and festivals in the Americas, the Middle East, Asia, and Europe, including translife - International Triennial of Media Art at the National Art Museum of China, Beijing, Gallerie Le Deco in Tokyo (JP), Elektra Festival in Montréal (CA) and the Künstlerhaus in Vienna (AT). She received the Kenneth Finkelstein Prize in Sculpture in Manitoba and the first prize in the iNTERFACES – Interactive Art Competition in Porto, Portugal.

Wilfred Lee: The Wail, 2024

Images: Created using Midjourney

Video: Generated with Runway Gen-3

Music: By Sugarghost / Udio

Voiceover: Performed by Wilfred Lee

2:13

The Wail – An AI-driven narrative that dives into the surreal and the sublime, where the ocean’s song becomes a wail, and humanity stands on the edge of understanding.

The Wail delves into the haunting intersection of humanity's technological advancements and nature's reclamation. As our technology evolves, it affects not only our survival but also the world’s oldest beings—the whales. In this AI-enhanced narrative, the ocean’s ancient creatures rise, reclaiming their dominion and challenging our place in their realm. The film weaves climate horror with themes of connection, suggesting that as technology progresses, it will become a means to communicate with these forgotten giants as they return to restore the world to its original state.

Toronto-based cinematic storyteller and educator, Wilfred Lee, blends traditional art techniques with AI innovation. With over a decade of experience working with top-tier platforms like Netflix, Apple TV, History Channel, and Discovery, he specializes in generating immersive visual stories. Wilfred's unique approach combines pre-production artistry with post-production AI enhancements, aiming to help businesses, especially in the entertainment industry, integrate AI into their creative workflows. Through his project 'Artist’s Journey,' he empowers others to explore the intersection of creativity and technology, fostering a digital renaissance for artists everywhere.

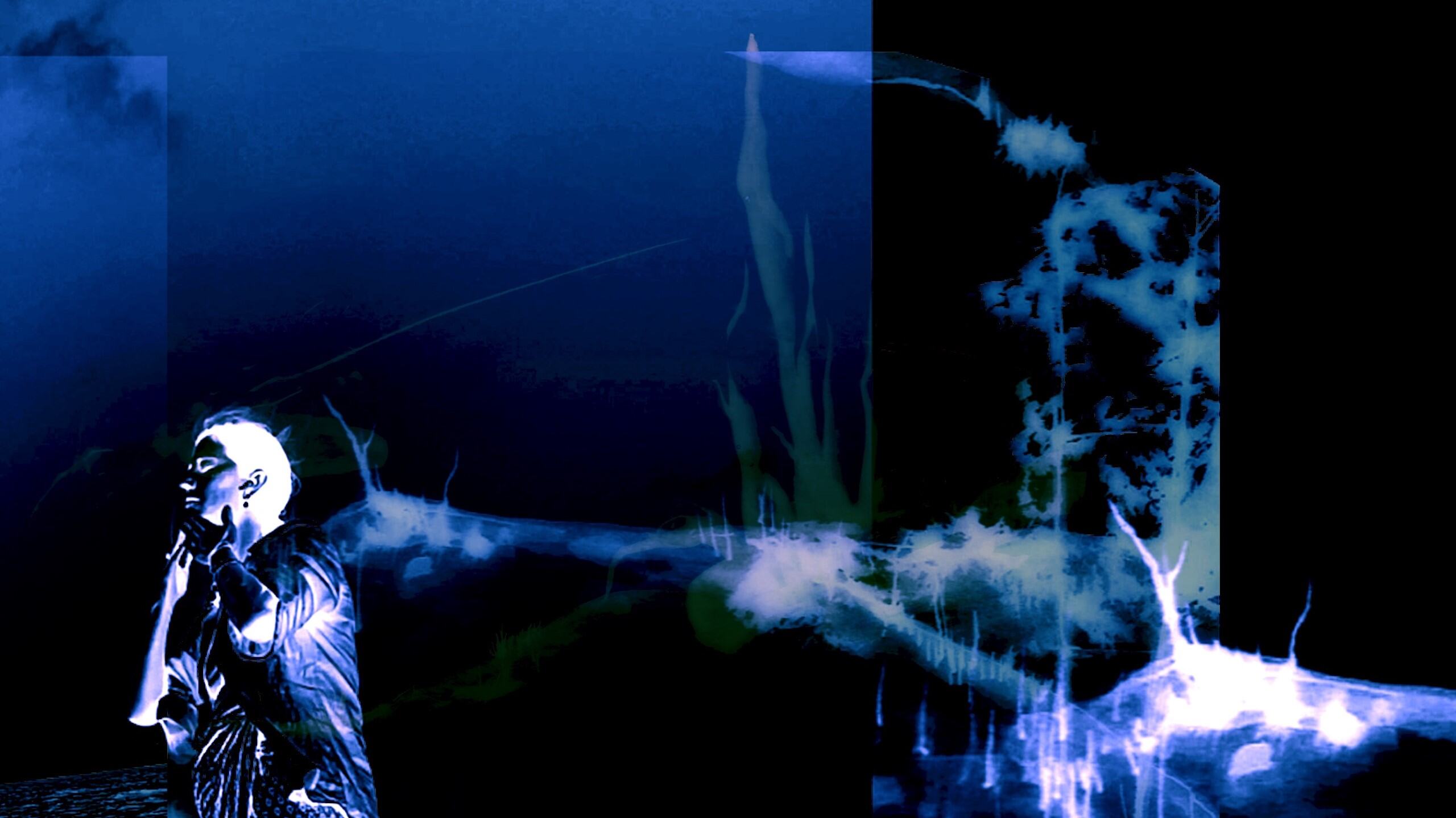

Evangeline Y Brooks: Blue Dot (Shadow), 2024

Interactive projection, lidar

Still from "Blue Dot (Shadow)", 2024

Blue Dot (Shadow) is an interactive text-based projection that tells the story of a robot trying to fit in on Earth. Viewers’ bodies interact with the text imagery, distorting and revealing words. The narrative is an adaptation of a short story originally titled “Transfer Student”, written by my sibling, Davy.

The story is about a robot, Nessie, who is sent to an Earth high school. She begins to learn and mimic patterns. She’s good at math and loves museums, jazz, and helping with the dishes. She starts to repeat patterns of those around her that make her tired, sluggish, and sick. And as she begins to break down, she’s deemed broken, and sent back for repairs.

Davy's full story can be found at bluedot.persona.co/story.

Evangeline Y Brooks is a postcyber artist working to maintain sustainable, accessible, and DIY artist communities against cultures of immediacy. Her practice engages with digital imagery bridging IRL, passing data back and forth over mediums and celebrating lost information. She is the Programming Manager at InterAccess and co-hosts the A/V showcase ponyHAUS.

Ben McCarthy: what abides, 2024

Six videos with audio

15 minutes

hallucination detail (what abides), Ben McCarthy, 2024

A suite of thematically interconnected, roughly two-minute videos exploring neural media, aesthetics and cosmology. These vignettes evidence my thinking-through and embedding in various machine learning tools including text- and image-to-image, -video, and -audio LLMs, and local RAVE neural network training. The work is contextualized in relation to historical subjectivity-altering technologies and techne– in particular the link between social stratification, information machines and my own captured hybridity within a deracinated, post-enlightenment, networked subjectivity.

Machine learning offers a new narrative about humanity as an emergent subjectivity– that part of it that can be datified. Language forms the infrastructure of our thought but is inadequate to rendering the real– questions of resolution, scale, and discerning signal from noise, not to mention its polysemantic qualities encumber language in relationship to ‘pure information’. Synthetic language, with its countless eyes and ears, fed by the exponential explosion of this datification of things, promises new ways of knowing, both human and nonhuman. A philosophical prominence accumulates around this emergent media and raises questions about the utility of the erstwhile consumer ‘individual’; of authorship; in which material forms understanding inheres; to what extent this is human, and what beyond solipsism underwrites a world view that centres the anthropos.

We need to be wary of tying our fates to technological promise or confusing novel products with progress. And yet, as we have seen, our evolving relationship to technology persistently alters what human means.

Out of need, we put an order to things, we make the chaos in which we are aswim a little more alluring, a little easier to look at. We do so now even as aesthetic modes and cosmologies recursively fractalize.

Ben McCarthy’s practice plays out a dichotomy between embodied and intellectual pleasure. He is drawn to the allure of sonic texture – the natural and synthetic sounds that attune and disorganize one’s perception. Through experiments in signal processing and emerging technology he seeks to arrange a sensual present that invites embodied attention. McCarthy works from the intuition that one’s experience of sound and voice is dense with personal and collective association. With sound, text, and documentary he thinks through the social and economic conditions that produce the listening subject. McCarthy is a Dora Award winning composer and his work has been presented across Canada, the US and Europe. He also co-organizes mainstream, a bi-monthly event for experimental music in Toronto.

Instagram: @paleeyesmusic

Omar Shabbar: Cloud Conversations, 2024

Opening night special performance.

Cloud Forest in Costa Rica, Omar Shabbar, May 2024

Cloud Conversations is a duet between guitar and AI that celebrates bird song. Trained on a wide range of bird song from the cloud forest regions of Costa Rica, this AI musical improvisor listens, reacts, and contributes to incoming musical dialogue, creating a musical duet with Omar. His composition for guitar is, in turn, designed to mimic the sounds of the bird song heard throughout his time in Costa Rica. This piece explores themes of natural vs synthetic, levels of creative agency and intelligence, and reciprocal influence of musical improvisation.

Omar Shabbar is a musician, researcher, sound artist, and audiophile based out of Toronto. Currently working towards a PhD in Digital Media at York University, Shabbar’s work explores expressive applications for new sound technologies through the creation of new instruments and sonic environments. As an active touring musician with two decades of gigging experience, Shabbar’s lifelong obsession with the guitar and live performance informs much of this creative process. His most recent work aims to look outwards, beyond conventional instruments, and focus on the role of the performance space as a co-creator. Moving past traditional performance spaces like churches or performance halls, this recent work focuses on community spaces, specifically outdoor performance spaces in Latin America. Shabbar’s work demonstrates how the sounds of these often overlooked spaces influence musicians and contribute to the overall performance.

To highlight Toronto as a place for learning art and AI, we are also showcasing student groups from TMU:

Dione Almeida, Crystal Chan, Josephine Chan, Charis Chu

Envisioning Tomorrow: The Interactive Smart City Model for Toronto in 2050, 2024

Multimedia interactive installation with video, 3D printed sculptures and generative AI

Envisioning Tomorrow, DALL-E 3 generated and edited image, Josephine Chan, 2024

Dione Almeida, Crystal Chan, Josephine Chan, and Charis Chu present "Envisioning Tomorrow: The Interactive Smart City Model for Toronto in 2050," a project aimed at inspiring sustainable urban development through diverse perspectives. This initiative combines immersive storytelling with interactive technology to engage visitors in envisioning and driving positive change. Leveraging live Generative AI, the model illustrates how individual actions can shape the future of the city. Developed using Python and Generative AI, this project is designed for policymakers, creatives, architects, and responsible citizens, fostering a shared vision for a sustainable and innovative urban future.

Jahnoya Cole, Harshita Jain, Sarah Morassutti, Lee Radovitzky

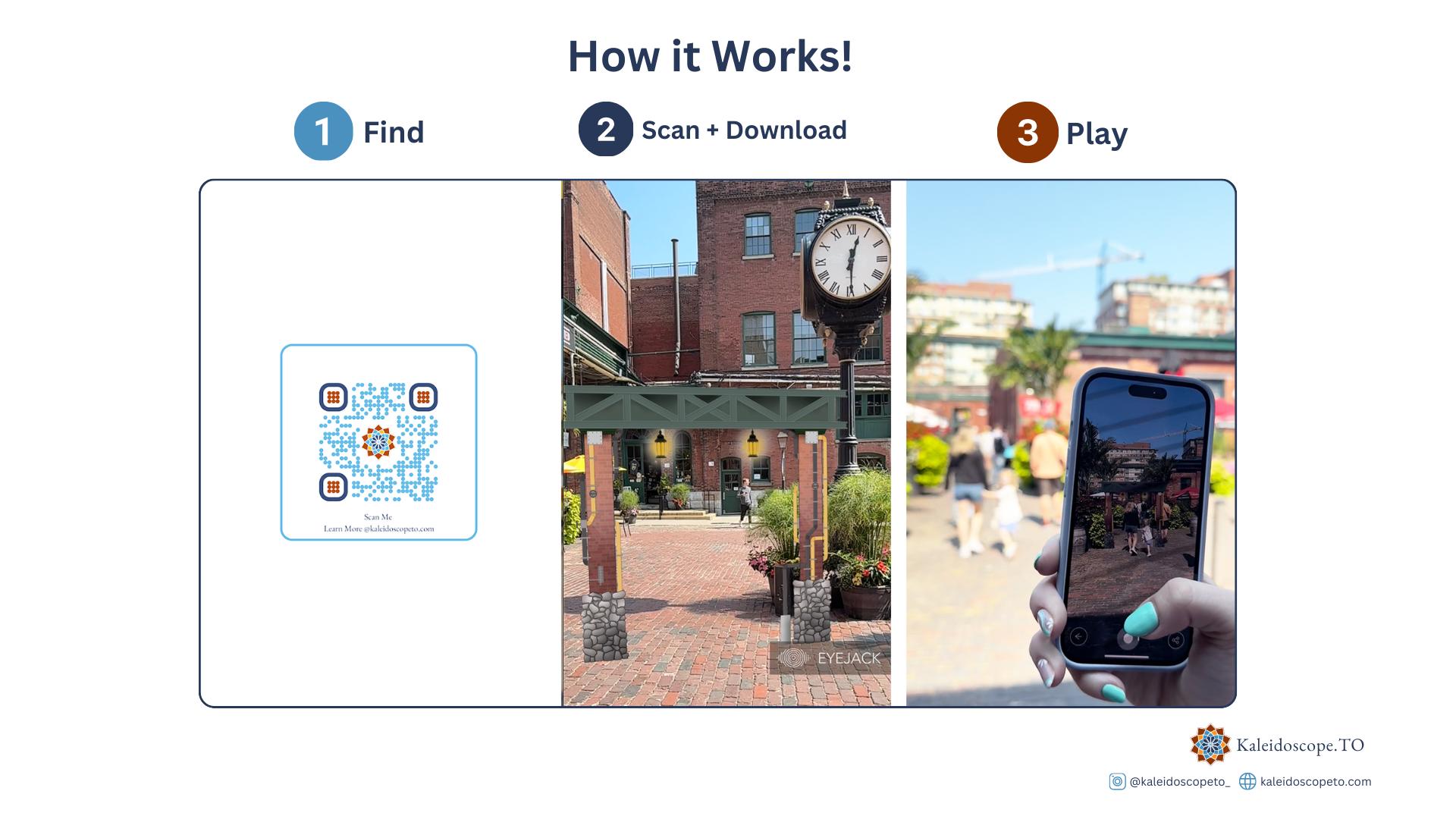

Kaleidoscope.TO, 2024

Augmented reality application, video

Experience Toronto like never before with Kaleidoscope.TO! Join our augmented reality scavenger hunt for an unparalleled adventure that lets you rediscover the city through a new lens.

Our mission is to inspire Torontonians to slow down, appreciate their surroundings, and look beyond the scope to reconnect with places they think they already know.

To learn more about our mission, check us out at @kaleidoscopeto_.

This project, Kaleidoscope.TO, created by Jahnoya Cole, Harshita Jain, Sarah Morassutti, and Lee Radovitzky, introduces an interactive city-wide scavenger hunt experience that aims to revolutionize the exploration of Toronto’s key markets by utilizing AR, interactive web and video, and QR code technology. Targeted towards Gen Z users, this scavenger hunt seeks to highlight new perspectives and change the way tourism is viewed through the inclusion of innovative technology.

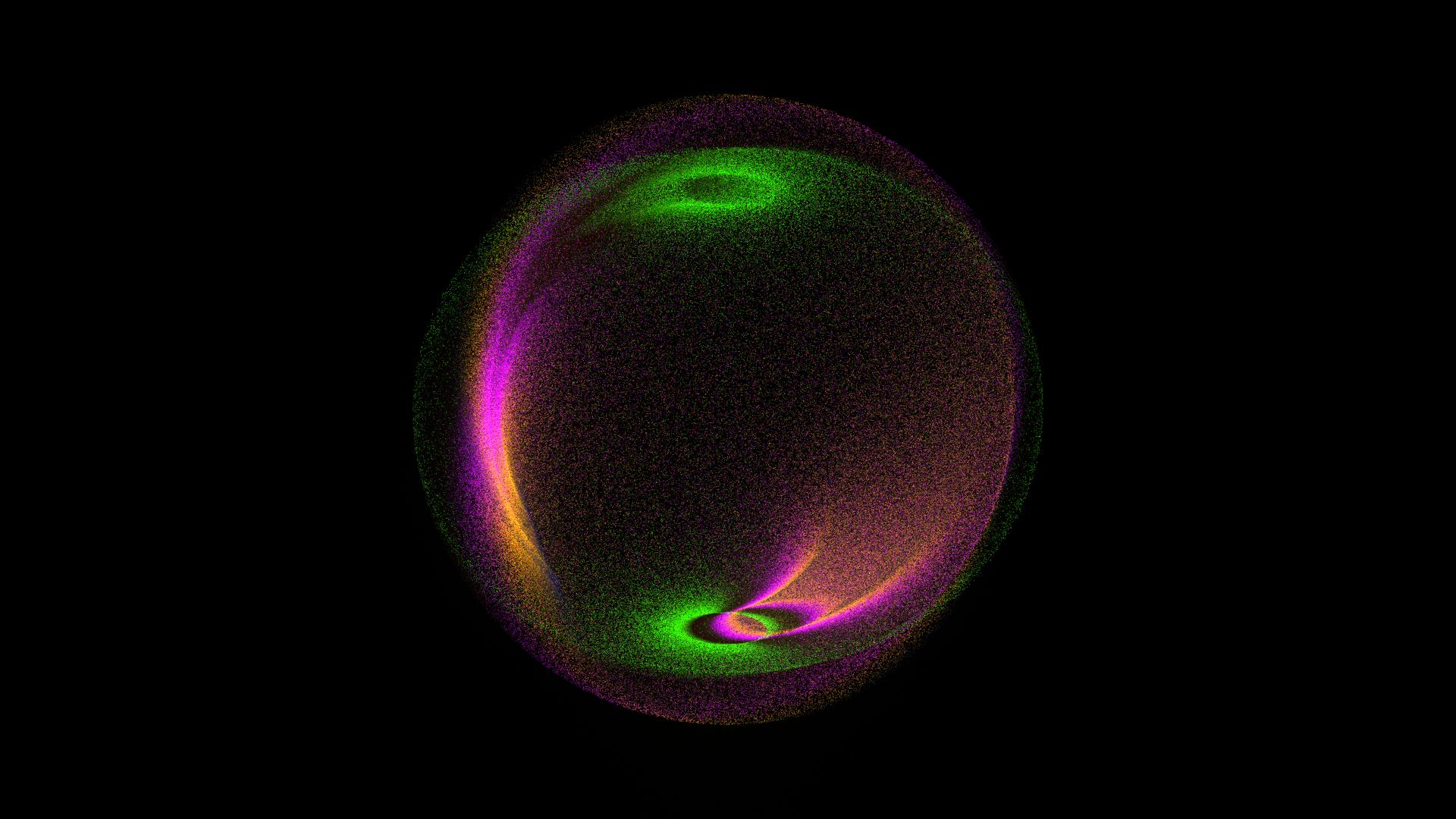

Ryan Allen-Hallam, Aashana Dhingra, Sujay Rambajue, Maykel Shehata

The Echo Wave Project, 2024

Still image from visualization of EEG brainwaves depicting an individual's state of relaxation and interest, The Echo Wave Project, 2024

The Echo Wave Project is an immersive art installation that explores the intersection of technology, art, and mental health. Using EEG technology, it visualizes mental reflections as abstract art, fostering dialogue around mental health and promoting inclusivity. Real-time EEG data from the EMOTIV INSIGHT headset is integrated into TouchDesigner to control a dynamic particle system, creating a unique visual experience for each participant. The visualizations are influenced by colour therapy principles and align with emotional states such as stress, relaxation, and excitement. The project demonstrates how EEG-driven art can enhance user engagement and foster empathy, offering innovative insights into data-driven art and mental well-being.

Our work is driven by a fascination with the unseen dimensions of human experience—particularly the mind. The Echo Wave Project aims to visualize the inner world of thoughts and emotions, using EEG technology as a bridge between the intangible and the tangible. By transforming brainwave data into dynamic abstract art, we invite viewers to reflect on their mental states and connect with their inner selves.

The inspiration for this project stems from our interest in mental health and the need for open conversations around it. We believe that art can act as a powerful medium for empathy and understanding. By integrating colour therapy principles, the installation responds in real-time to emotional shifts, using colour and movement to create a unique, immersive visual experience that represents the emotions and thoughts of each participant.

Our creative process blends technology with artistic intuition. We utilize the EMOTIV INSIGHT headset to collect brainwave data, which is then interpreted through TouchDesigner, a visual programming platform. The result is an evolving particle system that represents an individual's mental reflections in real-time.
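As a rough illustration of how emotional-state scores might steer a particle system, the sketch below maps three values to a colour and a speed. It is a hypothetical example in plain Python and does not use the EMOTIV or TouchDesigner APIs. The function `particle_params`, the assumption that scores arrive normalized to the range 0 to 1, the hue choices, and the speed formula are all invented for this example and are not the project's actual mapping.

```python
import colorsys


def clamp(value, low=0.0, high=1.0):
    """Keep a value inside the closed range [low, high]."""
    return max(low, min(high, value))


def particle_params(stress, relaxation, excitement):
    """Map three hypothetical scores in [0, 1] to particle colour and speed.

    The hues (red for stress, blue for relaxation, yellow for
    excitement) are illustrative assumptions, not the project's palette.
    """
    scores = {
        "stress": clamp(stress),
        "relaxation": clamp(relaxation),
        "excitement": clamp(excitement),
    }
    hues = {"stress": 0.0, "relaxation": 0.6, "excitement": 0.15}
    dominant = max(scores, key=scores.get)
    # Saturation grows with the strength of the dominant state.
    saturation = 0.3 + 0.7 * scores[dominant]
    red, green, blue = colorsys.hsv_to_rgb(hues[dominant], saturation, 1.0)
    # Stress and excitement speed the particles up; relaxation slows them.
    arousal = max(scores["stress"], scores["excitement"])
    speed = clamp(0.2 + 0.8 * arousal - 0.5 * scores["relaxation"], 0.05, 1.0)
    return {"state": dominant, "rgb": (red, green, blue), "speed": speed}


calm = particle_params(stress=0.1, relaxation=0.9, excitement=0.2)
tense = particle_params(stress=0.8, relaxation=0.1, excitement=0.3)
```

In this sketch a relaxed reading yields slow, blue-tinted particles and a stressed reading yields faster, red-tinted ones. A real installation would tune such a mapping by eye and by participant feedback.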

Through this work, we hope to reveal the inherent beauty of mental health when it is given space for expression and foster a deeper understanding of ourselves and one another through the fusion of art and technology.